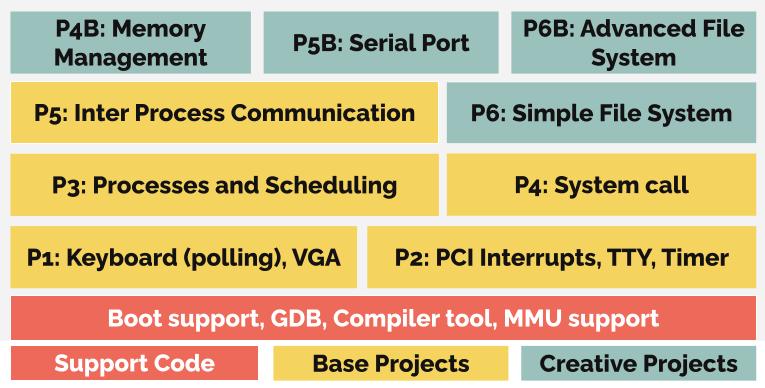

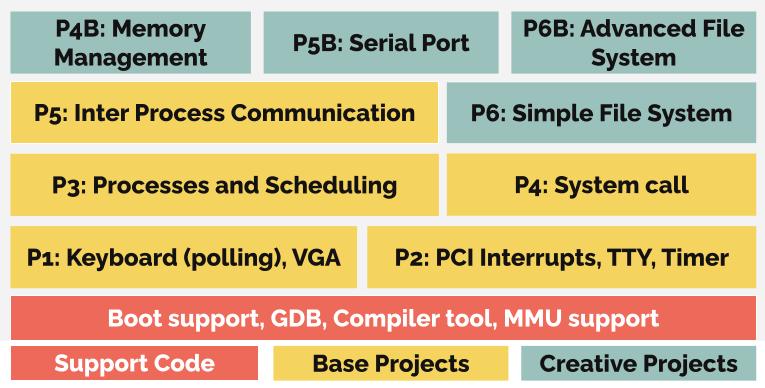

Projects

Operating System Pragmatics

- Description

- This project gives students hands-on experience applying the operating system principles taught in CSC 139, so that they develop a solid understanding of the design and implementation challenges in modern operating system development. I am currently updating these iterative projects to support current hardware. The project covers the project environment, hardware drivers, interrupts, CPU scheduling, memory management, and file systems.

- Project Slides

- Hardware/Simulation: QEMU (x86, aarch64), ARM

- Cloud Platform: Chameleon Cloud

- Software: C, Shell, Linux (Mint), GNU Compiler Collection (GCC), KVM

- Skills: Bash, C, make, Containers

- Update

- October 2025: Currently developing the yield functionality and running the operating system on a Raspberry Pi 5.

- September 2025: Implemented serial port communication for Project Phase 5B.

- August 2025: Built two departmental servers to support the class, so that students can run their projects on them.

- April 2025: Added user/kernel mode and virtual memory management to Project Phase 6B.

- February 2025: Rebuilt SpedeVM for deployment on Chameleon Cloud (OpenStack/KVM) and Apple MacBook Air M3 (Arm64 architecture).

- November 2024: Developed a simple file system for Project Phase 6A.

- October 2024: Implemented virtual memory management and user mode for Project Phase 3B.
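
The October 2025 update mentions yield functionality. As a rough illustration only, the sketch below models in user-space Python (not the project's C kernel code) how a voluntary yield interacts with round-robin CPU scheduling, assuming the usual semantics: the running process gives up the CPU before its quantum expires and moves to the tail of the ready queue. The names `Proc` and `schedule` and the quantum value are hypothetical and are not taken from the course projects.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Proc:
    """Hypothetical, minimal process record for the simulation."""
    pid: int
    remaining: int        # time slices of CPU work still needed
    yields: bool = False  # if True, the process calls yield() after each slice


def schedule(procs: List[Proc], quantum: int = 2) -> List[int]:
    """Round-robin over a FIFO ready queue; return the pid that ran in each slice."""
    ready = deque(procs)
    trace: List[int] = []
    while ready:
        cur = ready.popleft()          # dispatch the process at the head of the queue
        used = 0
        while cur.remaining > 0 and used < quantum:
            trace.append(cur.pid)      # run one time slice
            cur.remaining -= 1
            used += 1
            if cur.yields:             # voluntary yield: give up the CPU before the quantum ends
                break
        if cur.remaining > 0:          # yielded or quantum expired: requeue at the tail
            ready.append(cur)
    return trace


print(schedule([Proc(1, 3), Proc(2, 2, yields=True), Proc(3, 1)]))  # [1, 1, 2, 3, 1, 2]
print(schedule([Proc(1, 3), Proc(2, 2), Proc(3, 1)]))               # [1, 1, 2, 2, 3, 1]
```

In this model, yield and quantum expiry share the same requeue path; the only difference is what triggers the switch (the process itself versus a timer interrupt).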

LLM Serving with Edge Devices

- Description

- The proliferation of IoT devices and the exponential growth in data generation have made Machine Learning (ML) training and serving systems at the network edge increasingly important. Deploying ML models at the edge enables real-time decision-making, lower latency, stronger data privacy, and localized intelligence. However, the limited compute, memory, and energy budgets of edge devices hinder the deployment of sophisticated ML models, particularly large language models (LLMs).

- This research focuses on developing system and middleware solutions that exploit edge computing for ML model serving systems and distributed model training frameworks.

- Specifications:

- Hardware: Jetson AGX Orin (x3) and Jetson Orin Nano 8GB (x3)

- Software Stack: KServe, Kubernetes, Containers

- Skills: Serverless, Python, Large Language Models

- Update

- I am currently experimenting with the vLLM serving system.
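
One reason limited memory hinders LLM deployment on edge devices is simple arithmetic: the model weights and the key/value (KV) cache must both fit in device memory. The back-of-the-envelope sketch below assumes a hypothetical 7B-parameter decoder-only model with 32 layers, 32 KV heads, and a head dimension of 128, an FP16 KV cache, and an 8 GB budget matching the Jetson Orin Nano 8GB. That memory is shared by the CPU and GPU, and the sketch ignores activations, framework overhead, and the operating system, so the budget is optimistic and the totals are lower bounds.

```python
# Back-of-the-envelope LLM memory estimate. All model dimensions below are
# assumptions for illustration, not measurements of a specific model.

def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights at a given precision."""
    return num_params * bits_per_param // 8


def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_elem: int = 2) -> int:
    """Each token stores one key and one value vector (factor 2) per layer and KV head."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


def fits(num_params: int, bits_per_param: int, context_tokens: int,
         layers: int, kv_heads: int, head_dim: int, budget_bytes: int) -> bool:
    """True if the weights plus the KV cache of one sequence fit in the budget."""
    kv = context_tokens * kv_cache_bytes_per_token(layers, kv_heads, head_dim)
    return weight_bytes(num_params, bits_per_param) + kv <= budget_bytes


PARAMS = 7_000_000_000                    # assumed 7B-parameter model
LAYERS, KV_HEADS, HEAD_DIM = 32, 32, 128  # assumed model shape
BUDGET = 8 * 10**9                        # assumed: all 8 GB usable (optimistic)

for bits in (16, 8, 4):
    ok = fits(PARAMS, bits, 4096, LAYERS, KV_HEADS, HEAD_DIM, BUDGET)
    print(f"{bits}-bit weights, 4096-token context: fits={ok}")
```

Under these assumptions only the 4-bit configuration fits: FP16 weights alone need 14 GB, 8-bit weights (7 GB) plus a 4,096-token FP16 KV cache (about 2.1 GB) exceed 8 GB, and 4-bit weights (3.5 GB) plus the same cache total about 5.6 GB.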

Video To LLM

- Description

- The rapid growth of Internet of Things (IoT) devices has amplified the demand for real-time stream processing at the network edge, a critical enabler for smart cities, self-driving cars, online gaming, virtual reality, and augmented reality. However, existing edge stream processing systems and Kubernetes infrastructures rely on centralized management, creating bottlenecks in scalability, fault tolerance, and resource efficiency.

- This project proposes a heterogeneous serverless edge stream processing engine to address these challenges by leveraging the Function-as-a-Service (FaaS) model. The engine supports diverse distributed edge applications, such as edge AI and media streaming.

- Specifications:

- Hardware: Raspberry Pi 5 (x10) / Jetson Orin Nano 8GB (x3) / Jetson AGX Orin / Chameleon Cloud

- Operating System: Ubuntu

- Software Stack: MicroK8s/Kubernetes, Containers, NVIDIA DeepStream, OpenCV, PyTorch

- Skills: Bash, Java, C++, Python
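
The Function-as-a-Service (FaaS) model treats each processing step as a small, stateless function invoked once per event. The sketch below is a single-process illustration of that idea applied to a video stream, assuming frames arrive as plain dictionaries. `FunctionRegistry`, `motion_filter`, `downscale`, and the frame fields are hypothetical names, and the sketch does not model the distribution, scheduling, or decentralized management that the engine itself targets.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional

Event = Dict[str, Any]
Handler = Callable[[Event], Optional[Event]]


class FunctionRegistry:
    """Hypothetical in-process stand-in for a FaaS function registry."""

    def __init__(self) -> None:
        self._funcs: Dict[str, Handler] = {}

    def register(self, name: str) -> Callable[[Handler], Handler]:
        """Decorator that deploys a stateless handler under `name`."""
        def deco(fn: Handler) -> Handler:
            self._funcs[name] = fn
            return fn
        return deco

    def run_pipeline(self, names: List[str], events: Iterable[Event]) -> List[Event]:
        """Invoke the named functions on each event in order; a None result drops the event."""
        results: List[Event] = []
        for event in events:
            current: Optional[Event] = event
            for name in names:
                current = self._funcs[name](current)
                if current is None:
                    break
            if current is not None:
                results.append(current)
        return results


registry = FunctionRegistry()


@registry.register("motion_filter")
def motion_filter(frame: Event) -> Optional[Event]:
    # Drop frames without motion so downstream functions never run on them.
    return frame if frame["motion"] else None


@registry.register("downscale")
def downscale(frame: Event) -> Optional[Event]:
    # Placeholder for real work (e.g. a resize before inference): halve the resolution.
    # Returns a new dict, so the input frame is left unchanged.
    return {**frame, "width": frame["width"] // 2, "height": frame["height"] // 2}


frames = [
    {"id": 0, "width": 1920, "height": 1080, "motion": True},
    {"id": 1, "width": 1920, "height": 1080, "motion": False},
    {"id": 2, "width": 1920, "height": 1080, "motion": True},
]
for out in registry.run_pipeline(["motion_filter", "downscale"], frames):
    print(out["id"], out["width"], out["height"])  # prints "0 960 540" then "2 960 540"
```

Because each handler is stateless and looked up by name, an engine could in principle place, scale, or replace functions independently across devices; that part is outside this sketch.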